ResNet-Matconvnet


This tutorial reproduces the experimental results of “Deep Residual Learning for Image Recognition” by Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun, using MatConvNet. The MatConvNet re-implementation of the Deep Residual Network can be found here.

:x: I have stopped maintaining this repo. For fine-tuning ResNet, I would suggest using the Torch version from the Facebook repo.


ResNet

The Deep Residual Network has achieved state-of-the-art results in image classification and detection, winning the ImageNet and COCO competitions. Its core idea is to add “shortcut connections” to convolutional neural networks. A shortcut bypasses several stacked layers by performing an identity mapping, and its output is added to the output of those stacked layers. One residual unit consists of several stacked layers and a shortcut. The key contribution of the deep residual network is to address the degradation problem: as network depth increases, accuracy saturates and then degrades rapidly. With residual learning, networks can gain accuracy simply from increased depth.

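One Residual Unit in MatConvNet

Implementation of Residual Unit: the output is given by $y = F(x) + x$, where $x$ is the input and $F(x)$ is the transformation of stacked layers. If the input and output layers have different dimensions, the shortcut is given by a linear combination of the inputs, $y = F(x) + W_s x$.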
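A residual unit adds its input back onto the output of the stacked layers. The following is a minimal numeric sketch of that idea in plain MATLAB (it also runs in Octave). It is not the repository's MatConvNet code: the weights `W1`, `W2`, `Ws` and the helpers `F` and `G` are made up purely for illustration.

```matlab
% Toy residual unit on column vectors.
% W1, W2 and Ws are hypothetical weights chosen only for illustration.
relu = @(z) max(z, 0);

x  = [1; -2; 3];                 % input with 3 features
W1 = 0.5 * eye(3);               % first stacked layer
W2 = -eye(3);                    % second stacked layer
F  = @(x) W2 * relu(W1 * x);     % transformation of the stacked layers

% Same dimensions: identity shortcut, output = F(x) + x.
y_identity = F(x) + x;

% Different dimensions: project the input with Ws before adding.
Ws = [1 0 0; 0 1 0];             % 2x3 projection used by the shortcut
G  = @(x) Ws * F(x);             % stand-in for stacked layers with 2 outputs
y_projected = G(x) + Ws * x;

disp(y_identity');
disp(y_projected');
```

The identity shortcut needs no extra parameters, which is why the projection is only used when the dimensions differ.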

Get Started

The code relies on vlfeat and matconvnet, which should be downloaded and built before running the experiments. You can use the following command to download them.

git clone --recurse-submodules https://github.com/zhanghang1989/ResNet-Matconvnet.git

If you have problems compiling, please refer to Compiling VLFeat and MatConvNet.

Cifar Experiments

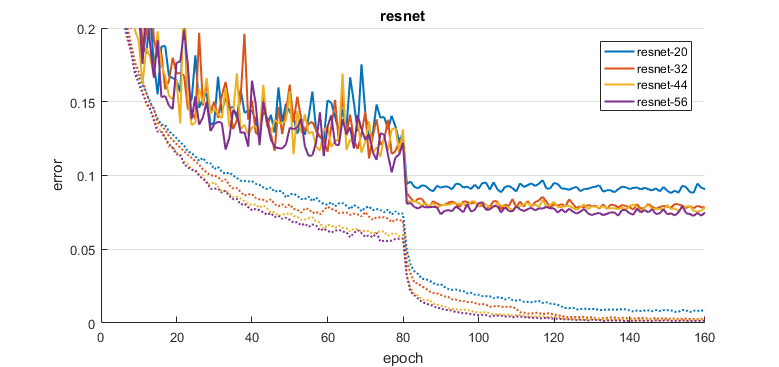

- Reproducing Figure 6 from the original paper.

run_cifar_experiments([20 32 44 56 110], 'plain', 'gpus', [1]);

run_cifar_experiments([20 32 44 56 110], 'resnet', 'gpus', [1]);
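The depths passed above follow the CIFAR design in the original paper, where a network has 6n+2 weighted layers and n residual units in each of its three stages. The short sketch below recovers n for each depth. It is plain MATLAB and only illustrates the arithmetic; whether `run_cifar_experiments` derives n the same way internally is an assumption.

```matlab
% CIFAR ResNets in the paper have depth = 6n + 2.
depths = [20 32 44 56 110];
n = (depths - 2) / 6;            % residual units per stage

% Every listed depth should correspond to a whole number of units.
assert(all(n == round(n)), 'depth must have the form 6n+2');

for k = 1:numel(depths)
  fprintf('depth %3d: n = %2d units per stage, %2d units in total\n', ...
          depths(k), n(k), 3 * n(k));
end
```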

- Reproducing the experiments in the Facebook blog. After removing the ReLU layer at the end of each residual unit, we observe a small but significant improvement in test performance, and convergence becomes smoother.

res_cifar(20, 'modelType', 'resnet', 'reLUafterSum', false,...
  'expDir', 'data/exp/cifar-resNOrelu-20', 'gpus', [2])

plot_results_mix('data/exp','cifar',[],[],'plots',{'resnet','resNOrelu'})
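To make the effect of the `reLUafterSum` option concrete, here is a small sketch in plain MATLAB of a residual unit with and without the final ReLU. Only the option name is taken from the call above. The stand-in `F` and the input values are hypothetical, and the repository's actual layer wiring is not shown here.

```matlab
relu = @(z) max(z, 0);
F    = @(x) -0.5 * x;     % hypothetical stand-in for the stacked layers
x    = [2; -4];

s = F(x) + x;             % stacked-layer output plus the shortcut

reLUafterSum = false;     % mirrors the option name used in the call above
if reLUafterSum
  y = relu(s);            % negative entries are clipped to zero
else
  y = s;                  % the sum is passed on unchanged
end

disp(y');
```

With the ReLU removed, negative values of the sum reach the next unit instead of being clipped to zero.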

ImageNet

- Download the dataset to data/ILSVRC2012 and follow the instructions in setup_imdb_imagenet.m.

run_experiments([18 34 50 101 152], 'gpus', [1 2 3 4 5 6 7 8]);
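The five ImageNet depths correspond to the architectures in Table 1 of the original paper. As a sanity check on what each depth means, the sketch below recomputes the layer count from the number of residual units per stage. It is plain MATLAB, the variable names are illustrative, and it does not call into the repository.

```matlab
% Residual units per stage, as listed in Table 1 of the ResNet paper.
depths  = [18 34 50 101 152];
units   = [2 2  2 2;    % ResNet-18  (basic units)
           3 4  6 3;    % ResNet-34  (basic units)
           3 4  6 3;    % ResNet-50  (bottleneck units)
           3 4 23 3;    % ResNet-101 (bottleneck units)
           3 8 36 3];   % ResNet-152 (bottleneck units)
perUnit = [2 2 3 3 3];  % conv layers per unit: basic = 2, bottleneck = 3

% Add 2 for the first convolution and the final fully connected layer.
counted = sum(units, 2)' .* perUnit + 2;

disp(counted);
```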

Fine-tune Your Own

- Download
  - the models to data/models: imagenet-resnet-50-dag, imagenet-resnet-101-dag, imagenet-resnet-152-dag
  - the datasets to data/: Material in Context Database (minc)

- Fine-tuning

res_finetune('datasetName', 'minc', 'datafn',...
  @setup_imdb_minc, 'gpus',[1 2]);

Compiling VLFeat and MatConvNet

- Compiling vlfeat

MAC>> make

On Windows, update Makefile.mak to match your MSVCROOT and WINSDK versions. An example can be found here. Please use the MSVC terminal to compile it.

Windows>> nmake /f Makefile.mak

On Ubuntu, you can download the pre-built binary file here.

- Compiling MatConvNet (install the compatible cudnn version to the CUDA root, then use the following command, updating the MATLAB version and CUDA root to match your system)

MAC>> make ARCH=maci64 MATLABROOT=/Applications/MATLAB_R2016a.app \
  ENABLE_GPU=yes CUDAROOT=/Developer/NVIDIA/CUDA-7.5 \
  CUDAMETHOD=nvcc ENABLE_CUDNN=yes CUDNNROOT=local/

Windows>> vl_compilenn('enableGpu', true, ...
  'cudaRoot', 'C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v7.5',...
  'cudaMethod', 'nvcc','enableCudnn', true);

Ubuntu>> vl_compilenn('enableGpu', true, ...
  'cudaRoot', '/usr/local/cuda',...
  'cudaMethod', 'nvcc','enableCudnn', true);
